Memory safety is the first step, not the last, towards secure software

Julia Андрэй / Unsplash

The U.S. government and technology giants alike are urging developers to replace memory-unsafe languages like C and C++ with modern, memory-safe languages like Rust to prevent whole classes of vulnerabilities. Will it be enough?

The U.S. government has declared war on memory-unsafe languages.

U.S. Cybersecurity and Infrastructure Security Agency (CISA) director Jen Easterly kicked off National Coding Week with a blog post in which she called out the C and C++ programming languages for being prone to vulnerabilities and called on developer training organizations to emphasize secure design with memory-safe programming languages moving forward.

"Memory unsafe code is everywhere now, and it’s full of holes that have given attackers the reach and scale to hold our systems for ransom, access our personal information, and steal our nation’s business and security secrets," Easterly said. "We can develop the best cybersecurity defenses in the world, but until we make headway on the issue of software insecurity, we will remain at unnecessary levels of risk."

Tech companies have also rallied around finding ways to eliminate memory-safety vulnerabilities. Google revealed in 2022 that its focus on adopting memory-safe languages such as Rust, Java and Kotlin led to a reduction in vulnerable code for Android along with a commensurate drop in the proportion of flaws related to memory safety. Microsoft has also turned to Rust to tackle memory safety issues within Windows, which have accounted for 60-70% of vulnerabilities in the operating system over the last five years. (The company has also reported a 5-15% performance boost following the switch to Rust, which shows that safety isn’t the language’s only selling point.)

Even Consumer Reports, the non-profit reviewer and consumer advocate, has weighed in, arguing that adopting memory-safe languages will lead to more security for end users. "While developers using memory-unsafe languages can attempt to avoid all the pitfalls of these languages, this is a losing battle, as experience has shown that individual expertise is no match for a systemic problem," the organization concluded in its report.

Any language better than C++

Much of the advocacy for memory safety has less to do with pushing developers towards a particular language than it does pushing them away from languages like C and C++. Experts told README that the security of programs written in those languages—and other languages that don’t promise memory safety—depends on knowledgeable developers armed with an arsenal of bug-finding tools.

Google, for example, is not shy in advocating for languages other than C++.

"It’s clear that the C++ ecosystem, in general, cannot be made secure with any level of investment," a Google spokesperson told README. "Google and many others have invested for decades in sanitizers, fuzzers, custom hardware support features, libraries, compiler analysis improvements and a number of other proposals that have never made it past research prototypes."

A recent vulnerability in the WebP image format exploited by NSO Group to deploy its Pegasus spyware on a target iPhone offers a prime example of the limitations of such tools. “Fuzzing,” which OWASP defines as “finding implementation bugs using malformed/semi-malformed data injection in an automated fashion,” failed to detect the flaws in the associated libwebp library. Yet the spyware company was able to find those problems and develop an exploit for them.

Some programming languages address the memory safety problem by taking control away from the developer. These languages manage a program’s memory automatically while it runs, most often through a technique called garbage collection (Swift relies on automatic reference counting instead). This approach works across a variety of domains: it’s used by scripting languages like Python, Ruby and Lua; languages used for app development like Swift and Kotlin; and the popular Go programming language. In performance-sensitive domains, however, the overhead associated with a garbage collector can be a deal-breaker.

That’s why Rust is the current favorite to replace code written in C and C++. It promises memory safety not through the use of a garbage collector, but through a strictly enforced system of “ownership” that allows it to determine how memory should be managed at compile time. This approach can offer performance on par with C and C++ without requiring developers to manage memory themselves—or exposing users of software written in Rust to the same vulnerabilities. (Assuming, of course, developers don’t bypass these checks via Rust’s “unsafe” mechanism.)
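To make the ownership idea concrete, here is a minimal sketch in Rust. The function names `consume` and `first_byte` are hypothetical and exist only for illustration; the point is that moving a value hands over responsibility for freeing it, while borrowing leaves the caller in charge.

```rust
// Hypothetical helper: takes ownership of the buffer and returns its length.
fn consume(buffer: Vec<u8>) -> usize {
    buffer.len()
    // `buffer` goes out of scope here and is freed exactly once,
    // without a garbage collector being involved.
}

// Hypothetical helper: borrows the buffer read-only; the caller keeps ownership.
fn first_byte(buffer: &[u8]) -> Option<u8> {
    // `first` is bounds-checked: an empty slice yields None
    // rather than an out-of-range read.
    buffer.first().copied()
}

fn main() {
    let data = vec![1u8, 2, 3];

    // Borrowing: `data` is still usable after this call.
    println!("first byte: {:?}", first_byte(&data));

    // Moving: ownership of `data` transfers into `consume`.
    let len = consume(data);
    println!("length: {len}");

    // Uncommenting the next line is rejected at compile time (use of a moved
    // value), which is how a use-after-free is ruled out before the program runs.
    // println!("{:?}", data);
}
```

The commented-out line is the interesting part: in C or C++ the equivalent mistake would compile and fail, or be exploited, at run time.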

These languages all improve security by focusing on memory management, runtime complexity and undefined behavior, three areas that can lead to vulnerabilities. Statically typed languages, which include Rust, Kotlin and Go, enforce variable types and assignments at compile time, allowing more granular error checking and static analysis. Rust and Kotlin in particular have looked to address the tricky problems posed by null values, which are widely used in programming to represent the lack of a value but have been called the billion-dollar mistake because of the sheer number of bugs they’ve caused. Many of these modern languages also require developers to handle potential errors in their program’s execution instead of ignoring them.
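As a rough illustration of how a language can push null handling and error handling into the type system, the sketch below uses Rust's standard `Option` and `Result` types. The configuration map and the helper names `find_port` and `parse_port` are assumptions made up for this example.

```rust
use std::collections::HashMap;
use std::num::ParseIntError;

// Hypothetical lookup: the return type states that there may be no value,
// so a caller cannot use a missing entry as if it were present.
fn find_port(config: &HashMap<String, String>, key: &str) -> Option<String> {
    config.get(key).cloned()
}

// Parsing can fail, so the function returns a Result instead of a bare number.
fn parse_port(text: &str) -> Result<u16, ParseIntError> {
    text.trim().parse::<u16>()
}

fn main() {
    let mut config = HashMap::new();
    config.insert("port".to_string(), "8080".to_string());

    // Both the "missing" and the "malformed" cases have to be handled
    // before the port number can be used.
    match find_port(&config, "port") {
        Some(text) => match parse_port(&text) {
            Ok(port) => println!("listening on {port}"),
            Err(err) => println!("invalid port: {err}"),
        },
        None => println!("no port configured"),
    }
}
```

Neither type prevents a developer from handling a failure badly, but both make it hard to overlook that a failure is possible.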

"All these languages are trying to ... make themselves more secure—I don't think there's any language (development team) saying security doesn't matter," Joel Marcey, director of technology at the Rust Foundation, told README. "They are limited, especially if they're older languages, by ... backwards compatibility and they don't want to ruin what made these languages great to begin with."

Admired, but not popular

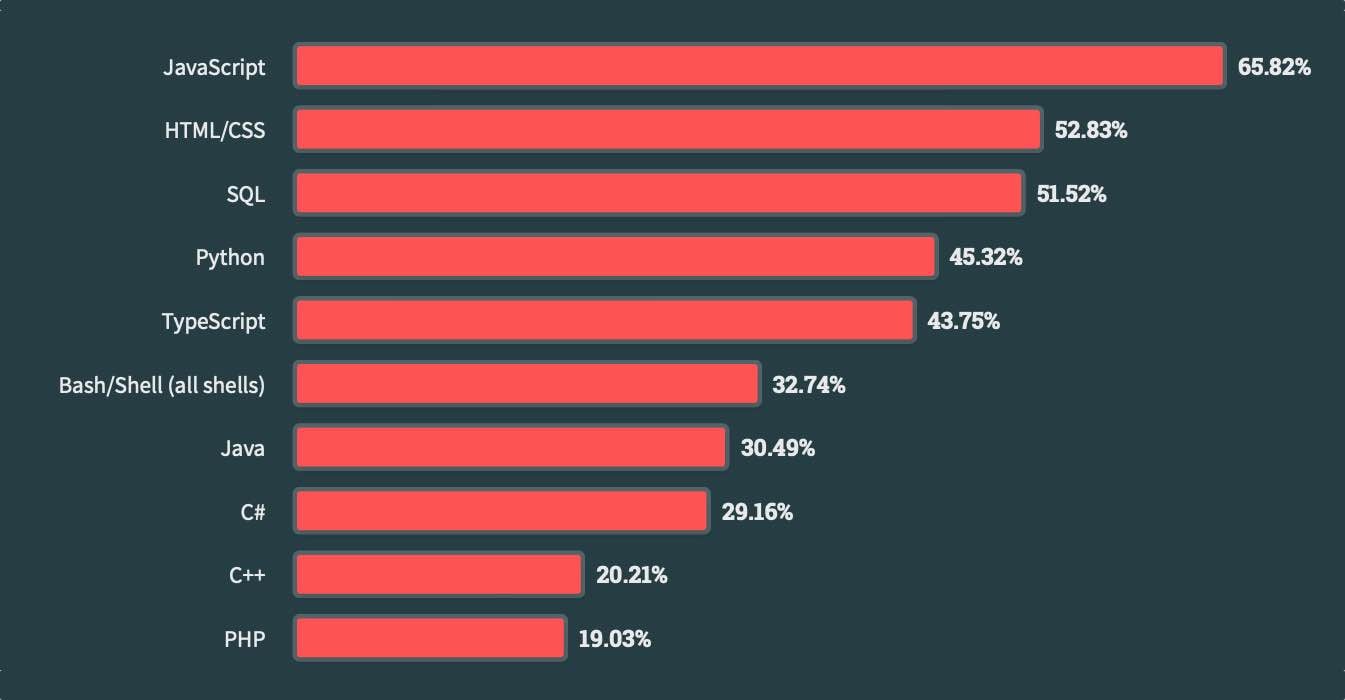

Most developers have heard about these languages, but few use them in their daily work. JavaScript, Python, and Java top the list of common programming languages (HTML/CSS and SQL are not typically considered programming languages), with 64%, 49%, and 31% of developers, respectively, regularly using those languages, according to Stack Overflow's annual developer survey. Python and Java have memory safety features, but the newer memory-safe languages are far behind: Go is the 12th most common language, while Rust ranks 14th and Kotlin ranks 15th.

Source: Stack Overflow Developer Survey 2023

Yet, developers and companies are looking at memory-safe languages to reduce the incidence of vulnerabilities in applications and services. Most developers are looking to shift to the newer languages, with Rust topping the list of "admired" languages, defined as those that a developer would like to use again, according to the Stack Overflow survey. (It was previously declared the “most loved” programming language for seven consecutive years; Stack Overflow changed the category for 2023.)

Businesses are looking to adopt programming languages that enforce security as well, said Tim Jarrett, vice president of strategic product management at Veracode, a software-security services firm.

"More companies are looking at their language choice with a lens of security in mind," he said. "We hear from a fair number of customers who are looking for things like support for new languages that come along—not exclusively, but with definitely a strong bias—toward understanding what those languages will do for them from a security perspective."

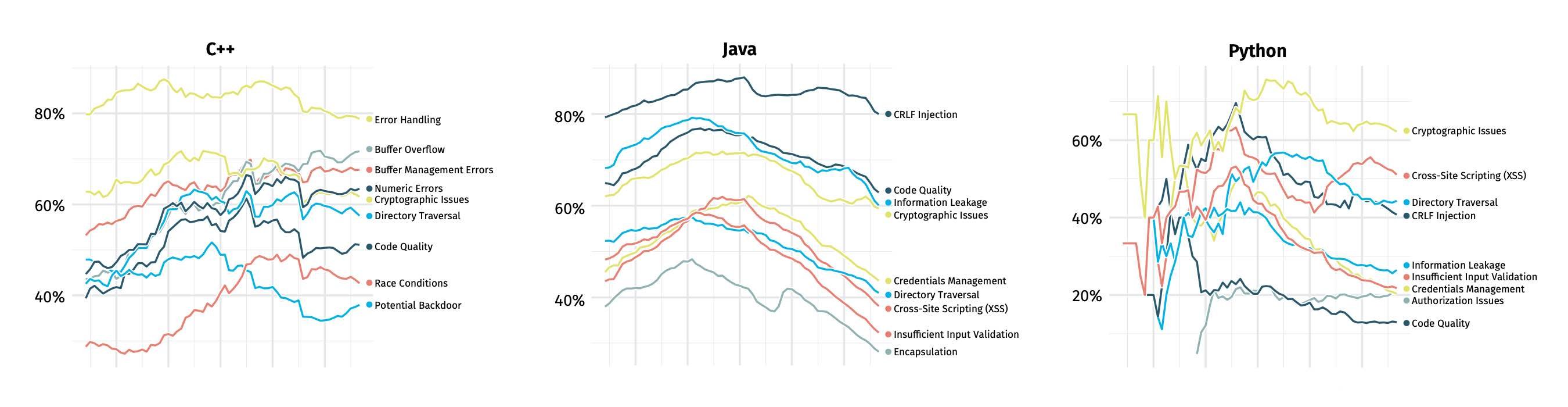

The top vulnerabilities discovered in C++ code analyzed by Veracode's service in 2022, for example, included error handling, buffer overflows and buffer management errors—the latter two issues both falling into the category of memory safety, according to Veracode's annual State of Software Security Report. Meanwhile, Java and Python programs vetted by Veracode did not see memory management issues at all.

Not the end of vulnerabilities

Yet, software written in those languages still suffered from significant vulnerabilities—just of different types. Injection attacks, code quality, cross-site scripting and cryptographic issues were the most common weaknesses found in Java and Python programs, underscoring that adopting modern memory-safe languages will not necessarily solve the issue of vulnerabilities in general.

Source: Veracode's State of Software Security, Volume 12 report

In fact, of the 99 most searched-for vulnerabilities in 2022, memory management errors accounted for only a quarter of the issues (24%), coming in second after injection vulnerabilities, such as SQL injection and cross-site scripting, which accounted for 39%, according to cybersecurity training platform Hack The Box. Developers who failed to consider security when designing their software, a broad category dubbed "Insecure Design," accounted for 16% of the top-99 flaws.

Most vulnerabilities can't be solved with a better programming language, however.

In fact, of the dozen vulnerabilities identified by CISA as those most exploited by attackers, none—zero—are memory-safety issues. Injection vulnerabilities, path traversal and improper authentication are all represented, but memory-management issues—such as use-after-free and buffer-overflow vulnerabilities—are not. Of the most "stubborn" software weaknesses—defined as those that have remained in the top-25 list of weaknesses for the last five years—only a third are issues with memory management, according to MITRE. Untrusted input and poor design are the other two major categories, similar to what Hack The Box found.

Insecure design issues and injection vulnerabilities can be tackled, but through education and scanning technologies rather than through the programming language itself. Developers can still, for example, write Rust inside unsafe blocks, which permit operations such as dereferencing raw pointers that the compiler cannot verify as memory-safe. Even code written entirely in safe Rust or other memory-safe languages can rely on flawed logic that leads to vulnerabilities; there is no silver bullet.
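A small sketch of that last point, using path traversal (one of the weakness classes mentioned above) as the example. Both functions are hypothetical and written entirely in safe Rust; the first compiles without complaint yet lets a request escape its base directory, while the second adds the logic check the compiler cannot supply.

```rust
use std::path::{Component, Path, PathBuf};

// Naive version: memory-safe, yet a request such as "../../etc/passwd"
// points outside the base directory. No compiler check flags this.
fn resolve_naive(base: &Path, requested: &str) -> PathBuf {
    base.join(requested)
}

// More careful version: accept only plain path segments, rejecting "..",
// "." and absolute paths. This is a simplified check for illustration;
// it does not account for symbolic links, for instance.
fn resolve_checked(base: &Path, requested: &str) -> Option<PathBuf> {
    let requested = Path::new(requested);
    let only_normal = requested
        .components()
        .all(|part| matches!(part, Component::Normal(_)));
    if only_normal {
        Some(base.join(requested))
    } else {
        None
    }
}

fn main() {
    let base = Path::new("/srv/files");
    let attack = "../../etc/passwd";

    println!("naive:   {:?}", resolve_naive(base, attack));
    println!("checked: {:?}", resolve_checked(base, attack));
    println!("checked: {:?}", resolve_checked(base, "reports/q1.txt"));
}
```

The flaw in `resolve_naive` is a design decision, not a memory error, which is why it survives a move to a memory-safe language.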

Yet, education takes time and developers are spending more than half their time on new code, improvements to old code and maintenance, according to a 2020 FOSS Contributor Survey. While 12% of their time was spent on bug reports, only 3% of their time was spent specifically on security.

For now, eliminating the two-thirds of vulnerabilities caused by memory-management issues should be a priority, because there is a clear path to that goal, the Rust Foundation's Marcey told README.

"If we can solve 70% of vulnerabilities, I think that's a great start," he said. "I think with memory safety, there's a clear line towards solving those problems through the choice of programming language."

And once memory-safety issues are exorcised from their software, developers can spend more time on finding and fixing the other vulnerabilities that continue to haunt their code.